Apple will scan photos on iPhones and in iCloud for child abuse imagery using a system called neuralMatch. If potentially illegal imagery is detected, the system alerts a team of human reviewers, who would then contact law enforcement once the imagery is verified.

neuralMatch, trained using images from the National Center for Missing & Exploited Children, will first be used in the US. The system works by hashing and comparing photos to a known database of child abuse imagery.
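To make "hashing and comparing" concrete, here is a minimal sketch of hash-based matching. It assumes each photo has already been reduced to a 64-bit perceptual hash and treats two hashes as a match when they differ in only a few bits. neuralMatch itself is not public, so the hash values, the `max_distance` tolerance, and the function names below are all hypothetical illustrations, not Apple's implementation.

```python
# Sketch only: NOT Apple's neuralMatch. Assumes photos are already reduced to
# 64-bit perceptual hashes; all values and names here are hypothetical.


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two 64-bit hashes."""
    return bin(a ^ b).count("1")


def matches_known_database(
    photo_hash: int, known_hashes: set[int], max_distance: int = 4
) -> bool:
    """Return True if photo_hash is within max_distance bits of any known hash.

    A small tolerance lets slightly altered copies (resized, recompressed)
    still match; max_distance=4 is an arbitrary illustrative choice.
    """
    return any(hamming_distance(photo_hash, known) <= max_distance for known in known_hashes)


# Hypothetical database of known hashes (placeholder values, not real data).
KNOWN_HASHES = {0xF0F0_F0F0_F0F0_F0F0, 0x1234_5678_9ABC_DEF0}

if __name__ == "__main__":
    near_copy = 0xF0F0_F0F0_F0F0_F0F1  # differs from a known hash by 1 bit
    unrelated = 0x0000_0000_0000_0000  # 32 bits away from both known hashes
    print(matches_known_database(near_copy, KNOWN_HASHES))  # True
    print(matches_known_database(unrelated, KNOWN_HASHES))  # False
```

The point of a perceptual hash, as opposed to a cryptographic one, is that visually similar images produce similar hashes, which is why the comparison uses a distance tolerance rather than exact equality.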

Every photo uploaded to iCloud in the US is given a ‘safety voucher’ that marks it as either suspect or not. Once the number of suspect photos passes a set threshold, Apple will allow those photos to be decrypted and, if necessary, passed on to the relevant authorities.
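The threshold idea can be sketched in a few lines. This is a simplified model under stated assumptions: the `SafetyVoucher` class, its plain `is_suspect` flag, and the threshold values are hypothetical. Apple's published design reportedly uses cryptography (threshold secret sharing) so that vouchers cannot be read at all below the threshold; this sketch skips the cryptography and only models the rule that nothing is released until enough suspect photos accumulate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyVoucher:
    """Hypothetical stand-in for a per-photo safety voucher."""

    photo_id: str
    is_suspect: bool  # a plain flag here; the real design keeps this hidden below the threshold


def reviewable_photos(vouchers: list[SafetyVoucher], threshold: int) -> list[str]:
    """Return suspect photo IDs only once their count reaches the threshold.

    Below the threshold nothing is released, mirroring the idea that a
    handful of matches alone does not unlock any photos.
    """
    suspect_ids = [v.photo_id for v in vouchers if v.is_suspect]
    return suspect_ids if len(suspect_ids) >= threshold else []


if __name__ == "__main__":
    account = [
        SafetyVoucher("img-001", False),
        SafetyVoucher("img-002", True),
        SafetyVoucher("img-003", True),
    ]
    print(reviewable_photos(account, threshold=3))  # []
    print(reviewable_photos(account, threshold=2))  # ['img-002', 'img-003']
```

Requiring a threshold rather than acting on a single match is meant to reduce the impact of false positives from the hash comparison.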

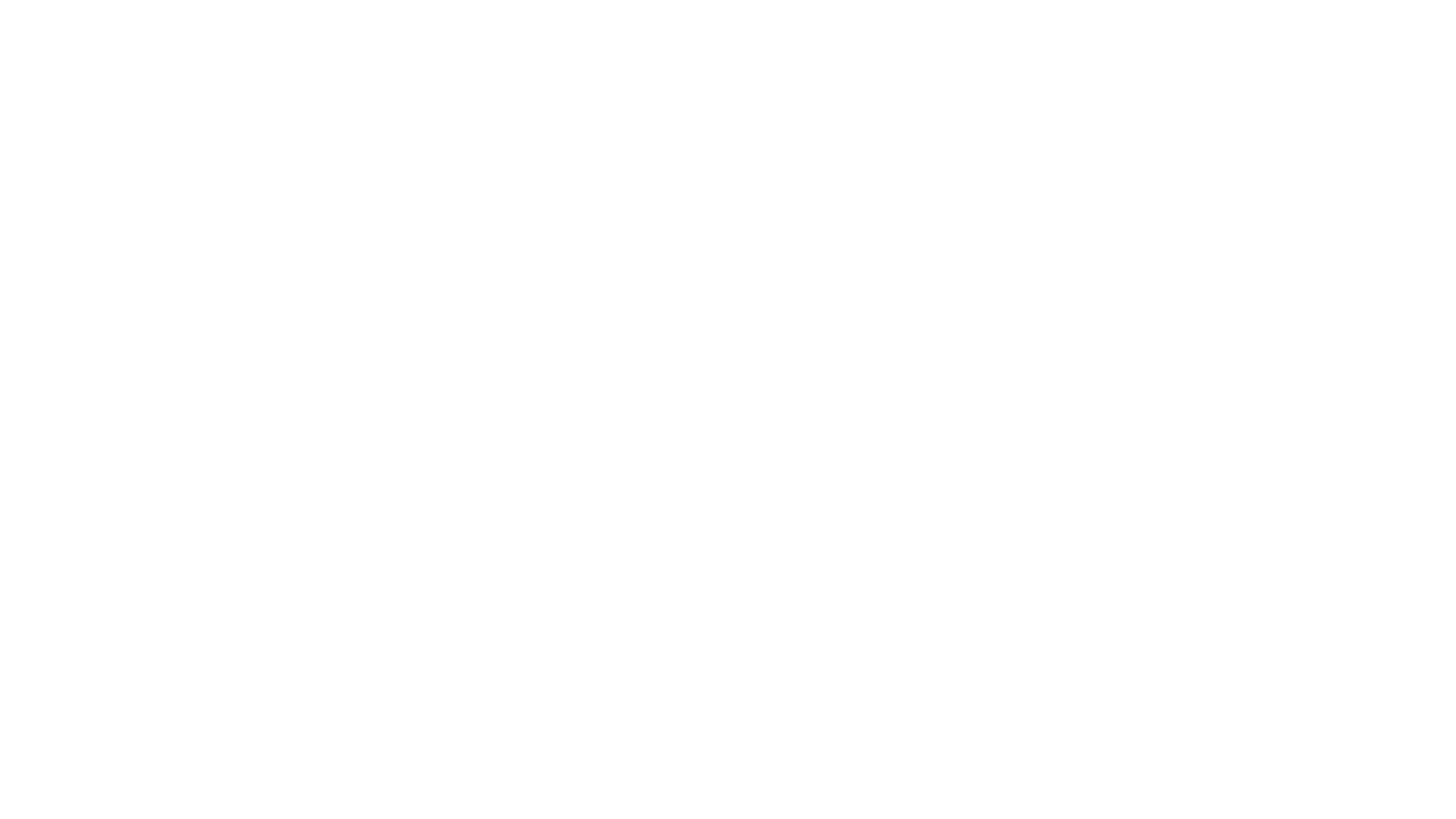

The Messages app will also include new tools to warn children and parents when sexually explicit photos are being exchanged.

For example, when a child receives an explicit image, the photo will be blurred and the child will be warned, given helpful resources, and reassured that it’s okay if they don’t want to view it. This feature, however, relies on on-device machine learning, so Apple doesn’t get access to the messages.

This system could help in criminal investigations, but it could also invite more demands for user data from governments and courts. While Apple already checks iCloud images against known child abuse imagery, this system would extend that checking to photos stored locally on the device.

After all, it is hard to identify child abuse imagery without scanning every image a user has in the first place. This sets a worrying precedent, one that many users might shrug off for the sake of convenience.

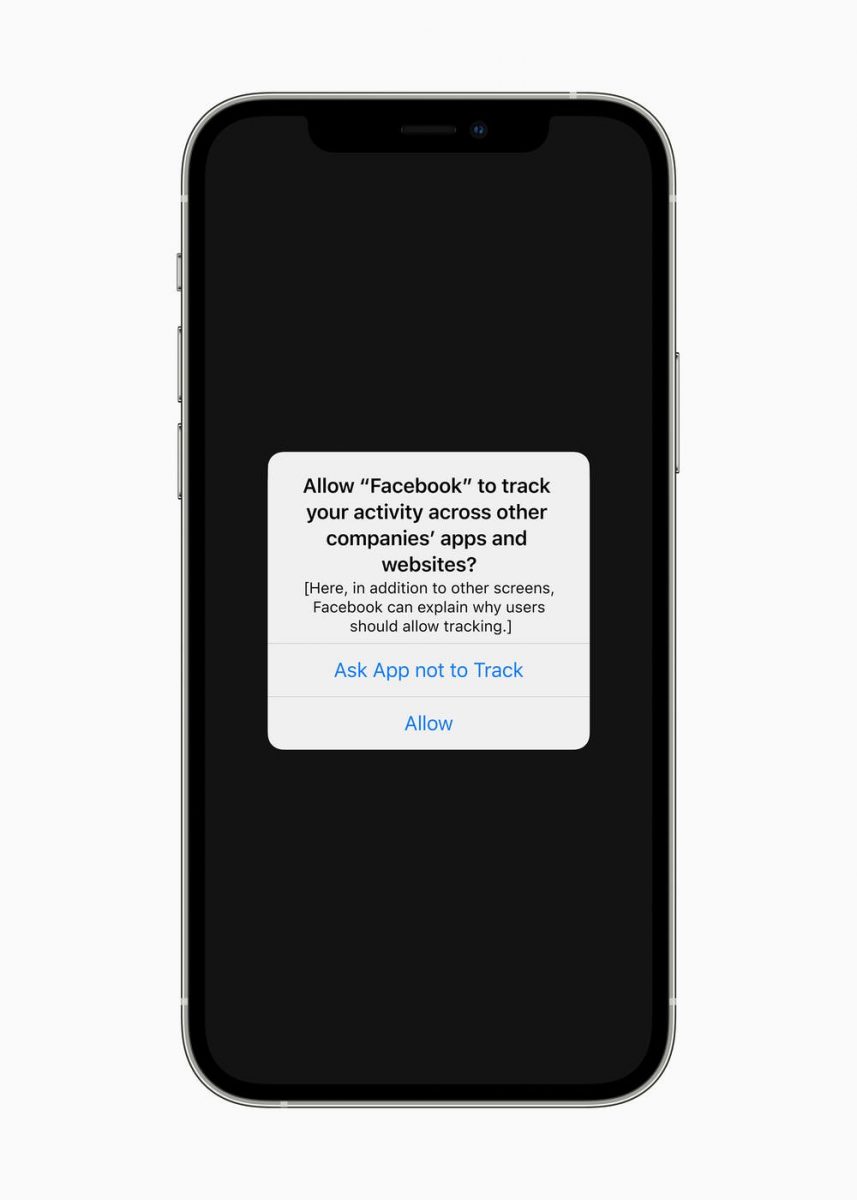

While Apple has been bolstering user privacy in recent years, such as with the App Tracking Transparency feature, the potential reach of this new system means that the trust Apple has built up over the years might take a hit.