Google has unveiled a number of new products and AI innovations at its second annual Search On event. The tech titan continues to improve the way we search for information and how that information can be made helpful to us in unexpected and creative ways.

One of the biggest innovations is the Multitask Unified Model (MUM), an advanced AI system that can simultaneously understand information across a range of formats such as text, images, and videos. It can identify and draw connections between concepts, topics, and ideas about the world around us.

MUM will redesign the way Google Search works, allowing for more natural and intuitive ways to search. New features will let users refine or broaden their searches, so they can better fine-tune the information they are trying to unearth. There will also be a visually browsable results page that is easy to navigate and can serve as a source of inspiration when looking for visual ideas.

The system can also identify related topics in videos, even if the topics aren’t explicitly mentioned, which makes it much easier to dig deeper and learn more about them.

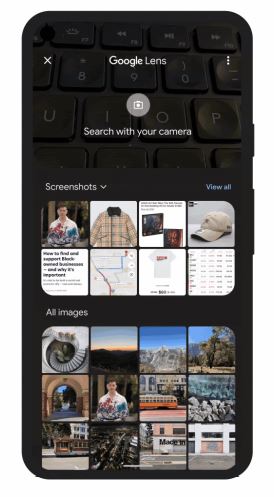

And soon, Lens mode will be available for iOS users and on desktop in Chrome. It makes images on a page searchable, so users can run image searches through the Google app on iOS, or select images, videos, and text content on a website and view search results without leaving the tab on desktop.

For apparel shoppers, it is also now easier to browse for clothes on mobile. For example, when “cropped jackets” is entered as a search term, a visual feed of cropped jackets appears along with information such as local shops and style guides, making the online shopping experience fuss-free. There will also be an “in-store” filter option to check whether nearby stores have specific items in stock. This is a useful feature because shopping now often starts online, with research about prices and availability, before one heads out to make a purchase in person.

The “About This Result” panels will be expanded to include more useful insights about the sources and topics one may be learning about on Search. Beyond seeing a source description from Wikipedia, one will be able to read what a site says about itself whenever that information is available. News, reviews, and helpful background context about a source will also be shown to help one better determine whether it is reliable. Finally, in the “About the topic” section, one can find top news coverage and results about the same topic from other sources.

Many of these new features and innovations will only be available in English in the coming months, and some, such as the visually browsable results page, refined shopping searches, and “About This Result” additions, will be available only in the US for now. Google will likely make further refinements and tweaks as the reach of these new features expands. Given Google’s propensity for improvements that integrate into users’ lives invisibly, it is possible that these advances in how users search for information will become indispensable one day.