For a company that has politely abstained from using the term ‘Artificial Intelligence’ in describing the machine learning and generative text, audio, and visual software that has captured the attention of the tech landscape in the last two years, Apple has certainly found a way to cleverly co-opt the AI acronym.

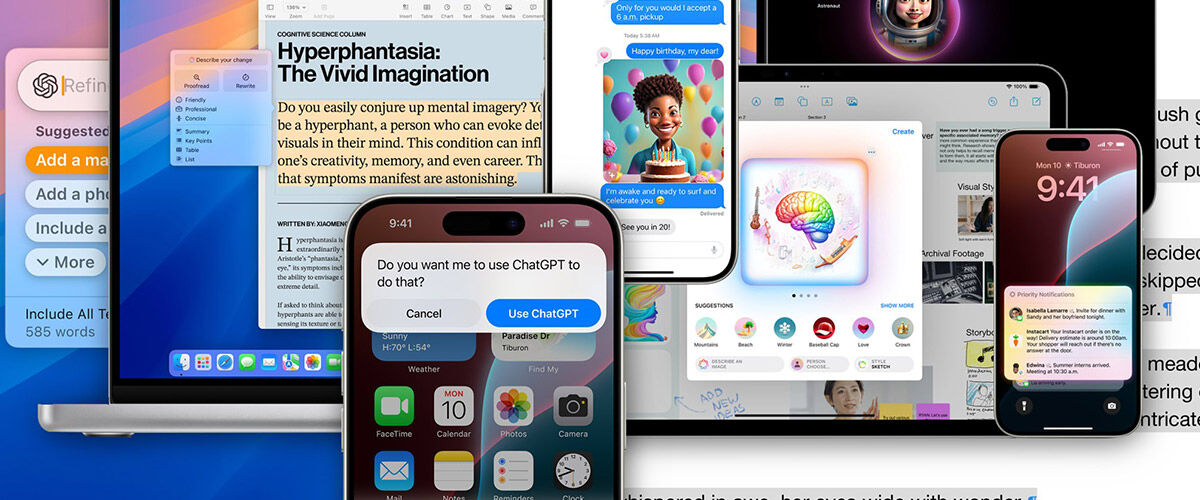

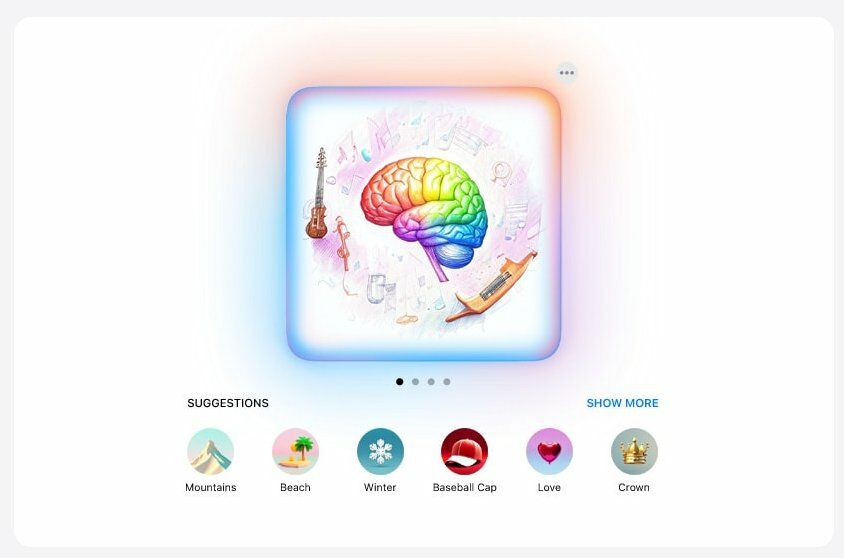

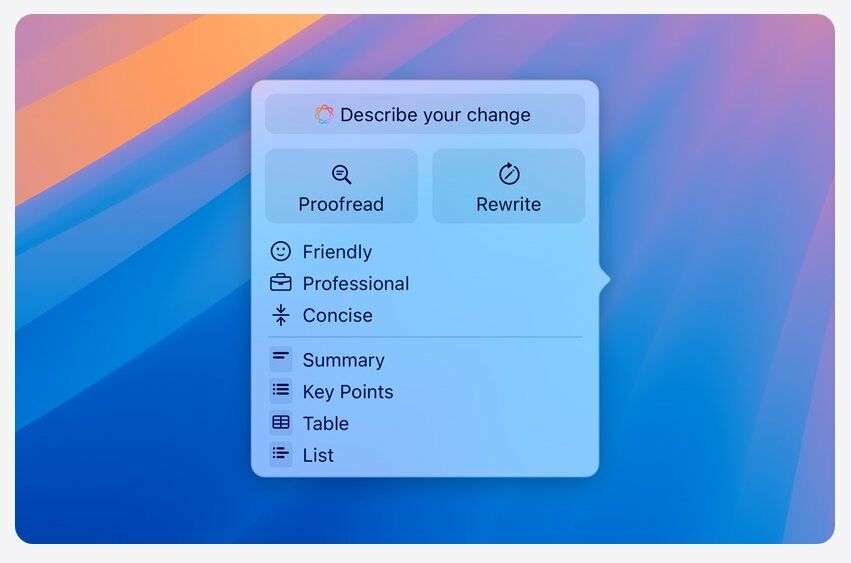

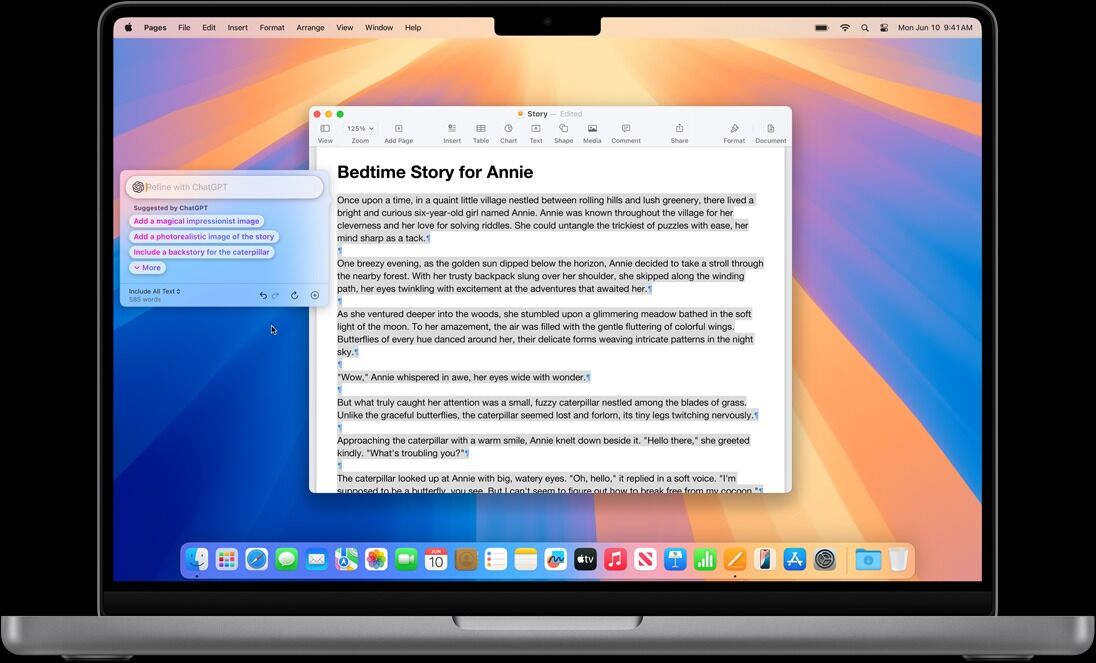

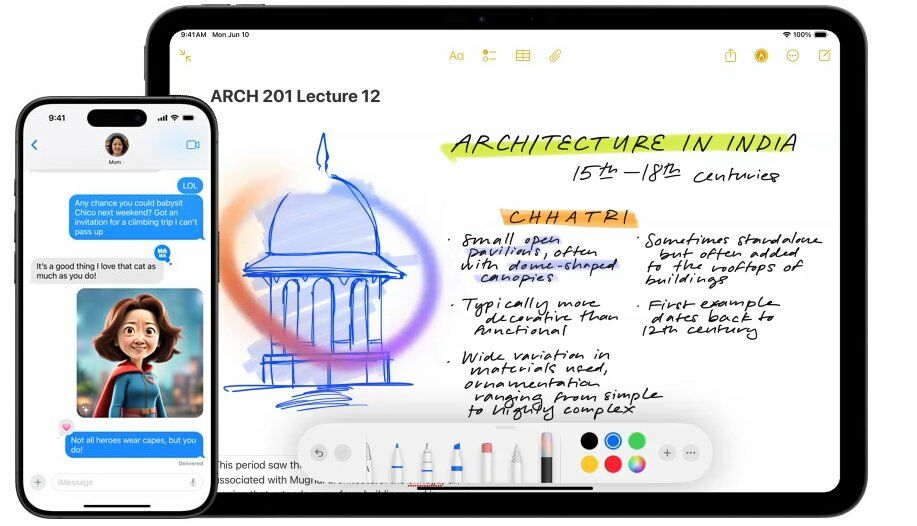

Apple Intelligence, announced at the company’s annual Worldwide Developers Conference (WWDC) at its Apple Park headquarters, is the new suite of AI features for iPhone, iPad and Mac devices running the upcoming iOS 18, iPadOS 18, and macOS Sequoia. Rather than a single app or piece of hardware that injects AI into Apple’s ecosystem, think of Apple’s generative AI deployment as a series of tools and features that users can draw on to enhance their daily lives. These range from AI-generated emojis (Genmoji) and image generation, via either on-device or cloud-powered generative AI models, to an enhanced Siri that can also tap on ChatGPT, one of the world’s best-known large language models, through a partnership with its developer, OpenAI.

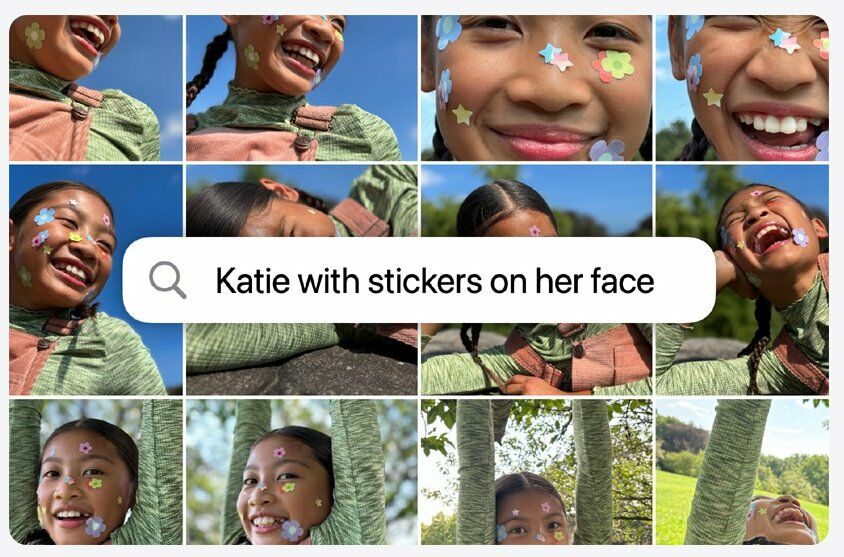

It’s like using a spellcheck or grammar tool on a document – there’s no need to turn anything on, and if there is a spelling error or a grammar recommendation, the software flags it without the user needing to know the proper spelling or understand how it works. From screening composed emails and offering contextualised writing recommendations, to having digital assistant Siri understand a more conversational tone during voice interactions, to simplifying photo editing and even searching your library of hundreds, if not thousands, of images, Apple Intelligence effectively does away with the more mundane manual steps that define these tasks. Because it focuses on results and experience and works seamlessly in the background, users don’t consciously think of it in action, even though it’s clearly present.

Say you want to find a photograph on your phone of a person taken in the park, and want the image enhanced before adding it to that individual’s contact card – you can now ask Siri to find the image, have the necessary edits made, and add the final image to the contact card.

Searches via Siri are now less contingent on having precise verbal instructions and are more contextual instead, so you can do follow-up queries that the software will now recognise as being associated, such as locating a restaurant, getting a reservation and then putting a calendar block for the event.

Remember that document, file or photo that your colleague sent you last week? Was it over email, WhatsApp or Telegram? Just ask Siri instead of trying hard to recall where it sits.

For those using Apple Pencil on the iPad, the software recognises handwriting and can clean up scribbles or misspellings, and even make edits following the user’s writing style.

All of this is enabled by the power of Apple silicon, which uses machine learning to understand, create or improve written language, take actions across apps, and simplify tasks. While the rollout is contingent on the latest operating systems, there is also a hardware element.

For Mac machines and the iPad, you need to be using Apple’s M family of processors, and an iPhone needs to be powered by the A17 Pro processor, which is currently found only in the iPhone 15 Pro and Pro Max models.

This requirement is hardly surprising, as newly introduced features tend to work only on the latest iteration of hardware, but Apple’s announcement is notable in several respects.

The first M1 chip debuted in 2020, which means any Mac or iPad with an M-series chip bought from that year onwards will be able to tap on Apple Intelligence. Compare this to Microsoft’s rollout of its Copilot generative AI. It’s available on Bing and Edge, and for subscribers of Microsoft 365, though not for the one-off installed version. Then there is Copilot+ on Windows laptops, but this is for a new series of Windows PCs that can perform tasks other Windows PCs cannot, which implies that current laptops are neither capable nor worth the investment.

And with the likes of Google Gemini or Meta AI, the generative model itself becomes the focus of attention, rather than the support it brings, and that includes the things it fails at. Known as AI hallucinations, these errors happen because AI models draw on information without verifying it, which is why Google’s AI Overviews search feature told users to add non-toxic glue to help cheese stick to pizza, a suggestion that had been made sarcastically online. Generative AI models are still learning, building and growing, but as massive as the tool itself can be, is it really necessary? It’s like buying a workshop simply because you need a screwdriver. Apple Intelligence isn’t meant to be the be-all and end-all of AI – it just needs to be useful for some things.

It’s also free, or rather, users don’t have to pay more for a feature that’s so heavily integrated. There are free versions of Copilot, Gemini and even ChatGPT, but the business model being touted is that generative AI is the new income generator for tech companies. But Apple is rolling it out for free (though some would argue that the Apple ecosystem already has a higher built-in adoption cost).

Apple Intelligence also has one significant advantage over all its competitors, and that is the recorded history between you and your Apple device. Already, your iPhone has your contact list of friends and family, and photos of your family, friends and colleagues. It knows your holidays and travel history, and what your schedule will be from your calendar. With it, you can tell Apple Intelligence to group your holiday photos with your partner, or a night out with your friends, or activities within a certain time frame. Meanwhile, Google Gemini or Meta AI has no clue as to your children’s names, what they look like, or the photos that were taken in Seoul last year, or at the mall last week.

And for those who argue that Apple Intelligence is merely a subset of real AI offerings that simplifies AI’s potential, it can equally be argued that other AI models are fractured and don’t offer everything either, simply because of their limitations. For more detailed and vibrant AI images, the recommendation isn’t Microsoft’s model, but Midjourney, DALL-E 3 or Stable Diffusion. Given AI hallucinations, the recommendations for using AI for research purposes, complete with citation of sources, are the likes of Perplexity or Scite – it’s about finding the right tool with the offerings that best suit your needs.

This level of intimacy and knowledge might seem scary at first glance, but it is what allows the company its extensive range of offerings, such as creating Genmoji that look like you, turning your friends into cartoon characters, or sifting through your library of images. Aside from the integration with ChatGPT, much of Apple’s AI deployment is dedicated to producing an overall impact on the user, rather than being as task-focused as the AI touted by Apple’s peers, including Google, Microsoft, and Facebook. It’s also putting extra effort into privacy, as AI models use existing data to improve, and artists, authors, talents and originators of content are pushing back against companies that are using their works to build a better AI model.

Apple is keeping things simple by putting privacy first. Requests that need more computing power than the device can offer tap on cloud computational capabilities, but under Apple’s own Private Cloud Compute, which it can control and manage. In other words, Apple Intelligence can do some of the things other AI models can, with outside help, but it’s also setting a new standard for privacy in AI that other companies might not be so focused on. This means information on your friends and family, which is on your devices, can be used to serve your needs, but it won’t be shared to improve any AI model because that information is yours. Apple also made it very clear that it will not share user data with OpenAI, or allow OpenAI to train its models with user data.

The flip side is that some elements can benefit the majority. Imagine if Apple took all the handwritten notes globally, especially those of doctors, and collectively processed them so that users anywhere could simply take a photo of a doctor’s notes and immediately know what was written down.