Artificial intelligence (AI) is not only about supporting the able-bodied; it can also help those with physical limitations. Apple is set to revolutionise accessibility with an array of new features in its upcoming iOS 18 update, enhancing device interaction for users with disabilities. Announced in conjunction with Global Accessibility Awareness Day on 16 May, these advancements utilise cutting-edge AI and machine learning technology to create a more inclusive user experience.

The highlight of the update is the innovative Eye Tracking technology, which allows users to control their iPhone and iPad using just their eyes. This feature is a significant leap from previous accessibility options, eliminating the need for additional hardware by using the device’s front-facing camera. Eye Tracking enables users to navigate through apps and select items on their screen simply by gazing at them, thanks to sophisticated algorithms that interpret visual focus and intent.

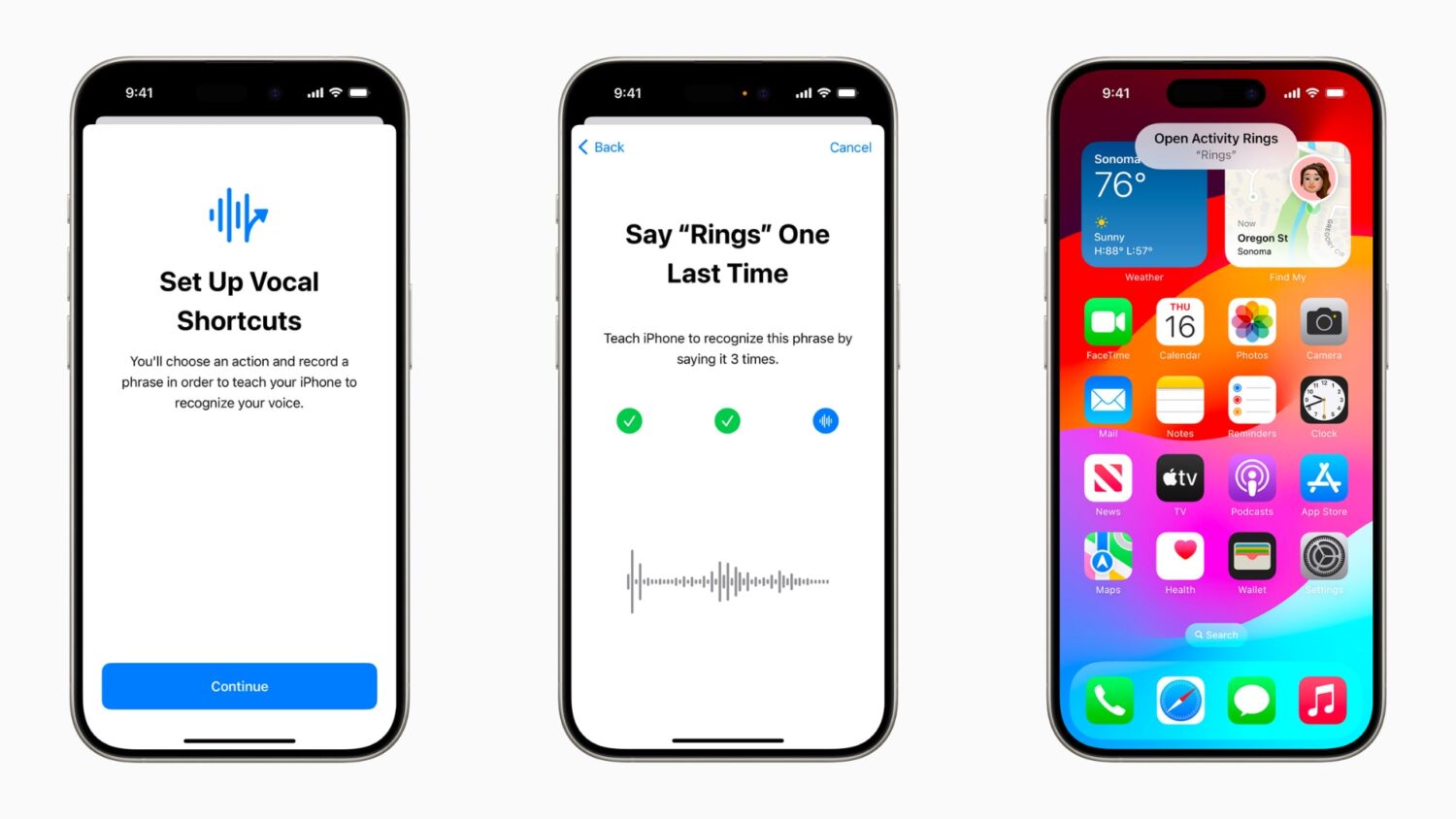

In addition to this visual assist, Apple is introducing Vocal Shortcuts, which let users execute commands and access apps with custom voice cues, making interaction more intuitive and personalised. Another addition, Listen for Atypical Speech, improves voice recognition for individuals with speech-affecting conditions such as ALS or cerebral palsy, so that their devices understand their unique speech patterns.

For users who are deaf or hard of hearing, Apple’s new Music Haptics translates songs into tactile experiences. This feature uses the device’s haptic engine to convey the rhythm and dynamics of music through taps and vibrations, providing a novel way to experience audio content. Music Haptics is compatible with an extensive selection of songs in the Apple Music library and will be open to developers as an API, paving the way for a more inclusive musical experience across apps.

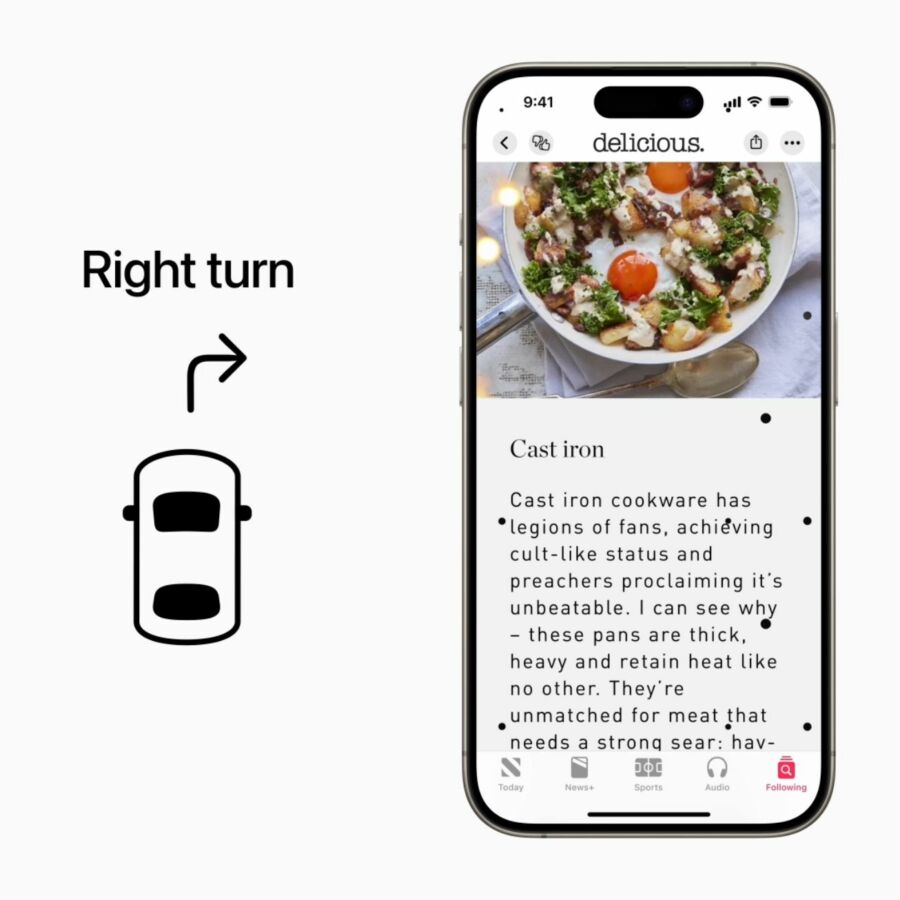

Apple is also tackling motion sickness with its Vehicle Motion Cues, designed to alleviate discomfort when using devices in moving vehicles. This feature uses animated dots that mimic the motion of the vehicle, helping to synchronise visual and physical perceptions and reduce nausea.

Apple is also enhancing its in-car system: CarPlay will gain Voice Control, which lets users control apps with just their voice; Color Filters for colourblind users; and Sound Recognition, which alerts drivers and passengers to critical sounds like sirens or horns.

Moreover, visionOS, found on the company’s Apple Vision Pro mixed reality headset, will also support Live Captions during FaceTime calls, aiding users who are deaf or hard of hearing in following conversations more easily. These captions are part of a broader set of updates aimed at enhancing accessibility across Apple’s ecosystem, including support for Made for iPhone hearing devices and adjustments for users sensitive to bright lights or high-contrast visuals.

These new features underscore Apple’s commitment to accessibility, promising to transform how users with diverse needs interact with technology. Slated for release later this year, these enhancements will likely debut at Apple’s annual Worldwide Developers Conference (WWDC) in June, marking significant strides in making tech more accessible to everyone.

In celebration of Global Accessibility Awareness Day, Apple is hosting hands-on tech sessions tailored to showcase the accessibility features of its devices. These sessions, available at Apple Stores worldwide, offer insights into how users with various disabilities can harness the technology for easier, more inclusive access to digital spaces.

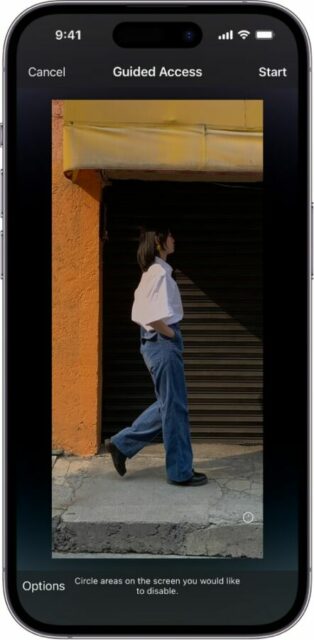

In the Get Started: Learning and Literacy Features session, participants learn to use their Mac and iOS devices to support learning and literacy for everyone. Built-in accessibility features such as Guided Access help maintain focus by limiting the device to a single app, which makes the feature well suited to educators and caregivers who want to direct learners’ attention without distractions.

Tools such as Spoken Content, Look Up, Background Sounds, Dictation, and Predictive Text are also explored, enhancing users’ reading and spelling capabilities. These features aim to assist users in connecting, creating, and enjoying what they love without barriers – integral to the company’s ethos of inclusivity.

Spoken Content enables devices to read out text on the screen or provide feedback on what is typed, benefiting users with vision impairments or reading difficulties, while Look Up lets users instantly look up the definition of a word or phrase within any app or website. Meanwhile, Dictation and Predictive Text simplify text entry on devices, showcasing Apple’s push towards enhancing user experience through voice recognition and predictive technology.

Other highlighted features include Focus, which helps users stay concentrated or step away from their devices by customising notification settings, and Background Sounds, which masks environmental noise to aid concentration or relaxation.

The free sessions, part of the Today at Apple hands-on series, underscore Apple’s strategy of inclusive design. They not only demonstrate the practical application of accessibility features but also show users how to set up and customise these features to suit individual needs. For instance, Guided Access can be configured to disable specific areas of the screen or certain buttons during use, helping users stay focused on the task at hand.

For more information about the new features or to book a spot in these accessibility sessions, visit Apple’s website.